The People vs Racism

Social media wasn’t the only villain in the UK riots, but its role in fuelling racism can’t be ignored.

The UK has been a scary place over the past few weeks. As a Muslim woman of colour, I feared for my own safety as well as the safety of other black and brown people. When the far-right riots were reaching their crescendo, I hid in my flat.

For days I was too frightened to go on my daily runs, and only went out to the supermarket accompanied by friends.

I knew that civil unrest was inevitable following the spread of disinformation after the tragic killings in Southport. When you hear government ministers describe immigrants who look like you as “invaders” and a “swarm”, you know it’s only a matter of time before online words become offline actions.

I’m Dahaba Ali Hussen, author of the fifth issue of The People, a bi-weekly newsletter from People vs Big Tech and the Citizens. From racial bias in AI technologies (did anyone else see the AI-generated Sudanese Barbie holding a rifle?) to racist live facial recognition technology, I’m not short of content.

But considering recent events, I’m focusing on social media’s role in spreading racist hate and disinformation. Yes, we should be worried about Elon Musk’s X helping Tommy Robinson rack up hundreds of millions of views in the first days of the UK riots.

But it doesn’t stop there. All platforms are profiting from amplifying hate and hysteria, through algorithms that prioritise enraging and addictive content. It’s also impossible to separate social media from the influence of politicians and media who have been capitalising on populist, far-right narratives. Not to mention centuries of racial oppression in Britain.

Don't you know that you're toxic? ☠️🧪

“Expanding racist rhetoric is part of Big Tech’s core business,” Oyidiya Oji, a digital rights advisor for the European Network Against Racism, told me. She said the problem lies with the platforms’ “toxic business model.”

Oyidiya also believes social media companies are “one of the biggest machines contributing to polarisation,” from the speed at which they spread disinformation to the way they tailor the content on our feeds based on data-profiling.

As we explored in our polarisation issue, the algorithm erases more nuanced opinions, and feeds us stories it thinks we’ll like, trapping us in echo chambers which push us further apart. This benefits those seeking to cause chaos. Divisive issues like race and Israel/Palestine have been used by Russia to incite conflict in countries such as the US and France.

Tech expert Johnny Ryan reminds us that this is a data problem, not a speech problem. In a recent tweet he explained how it's algorithms, not us, that decide what content we see.

Oyidiya wants to see better content moderation from the platforms too. “There is a problem with who is deciding what kind of content is being removed,” she explained, adding that the platforms don’t have enough content moderators who know what racism is, nor do they do proper due diligence by asking people from the most affected communities.

When it comes to moderation, platforms have promoted the idea that technologies are “race neutral”. But in reality “colour-blind” strategies can worsen systemic racism and algorithmic bias, especially when coupled with a lack of tailored efforts to counter the unique forms of hate speech that affected communities face online.

A many-headed monster

The UK has a long and tiring history of racism and hate crimes. Historically, there was the slave trade and colonialism. More recently, there has been Islamophobia fuelled by the war on terror, xenophobia in response to the migrant crisis, and a police force that was literally described as ‘institutionally racist’ by its own head earlier this year.

So we get it. We cannot name Big Tech companies as the architects of the UK riots - or similar violence elsewhere. In truth, Black Lives Matter wouldn’t have happened without them. Social media is essential for antiracist organising - just look at the thousands of counter-protesters who took to UK streets last week.

But we can confidently say that social media platforms host hateful, racist and often false content, amplified by their algorithms - while failing to adhere to their own policies regarding discriminatory content.

Anwar Akhtar, head of The Samosa, a London-based arts and education charity that works with communities in culturally diverse areas, told me: “Fifteen or twenty years ago racism definitely existed but we wouldn’t see people being emboldened and having their racist rhetoric amplified on social media.”

He added that bad actors are “stirring up tensions between communities and exploiting vulnerable young people in some of the poorest communities in the UK.”

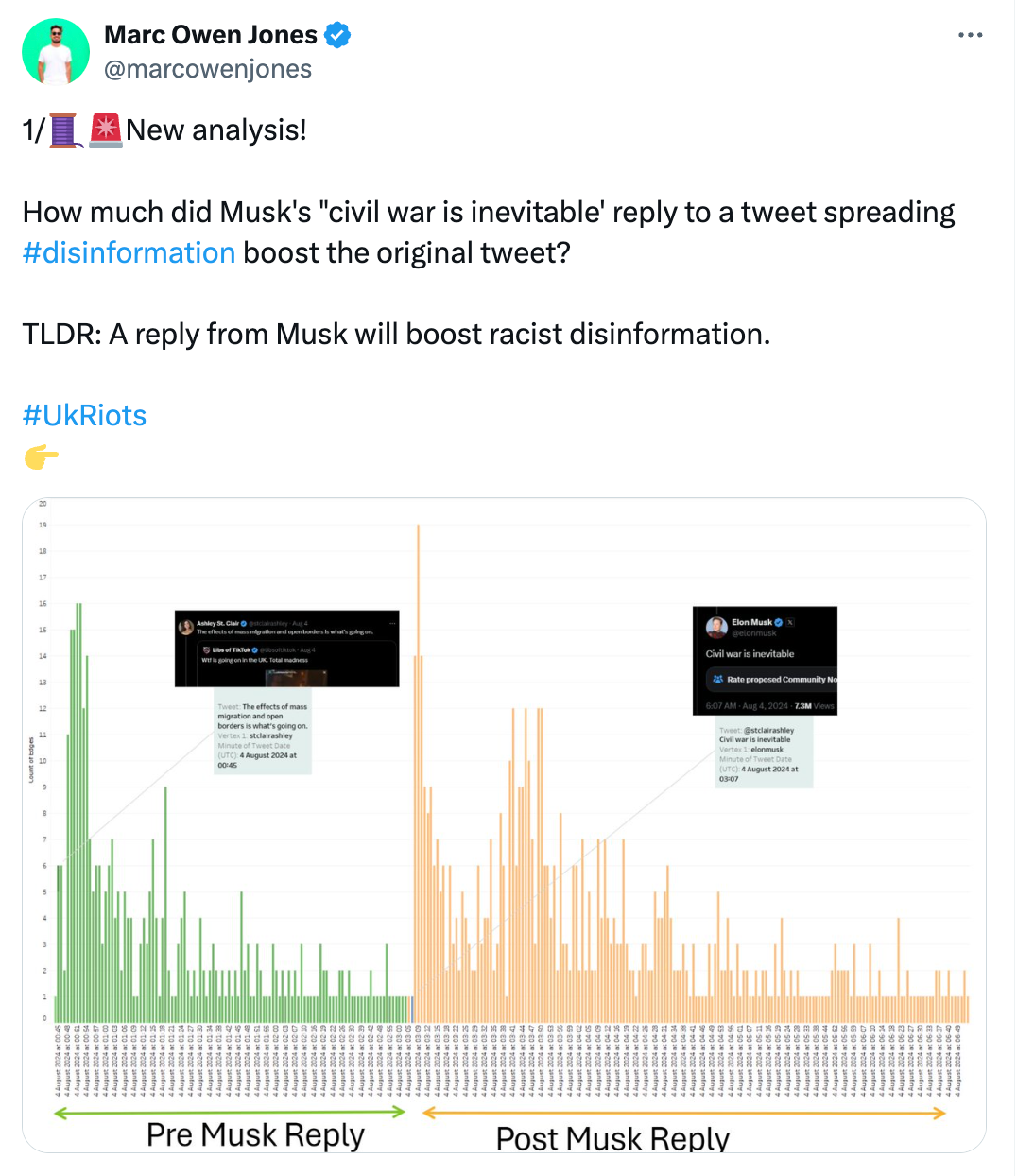

Disinformation expert Marc Owen Jones has been analysing how false and racist content has been spreading on X. Just a day after the incident in Southport, he tracked “at least 27 million impressions for posts stating or speculating that the attacker was Muslim, a migrant, refugee or foreigner.” He told us that while factors like economic stagnation and legacy media have contributed to the rise of the far right:

“We don’t necessarily need to class social media as a separate entity. We can see social media as a magnification of legacy media. It also gives hate speech entrepreneurs platforms, and it legitimises ideas that might have otherwise been taboo.”

Battling the beast ⚔️

Britain and the EU have recently passed laws aiming to prevent harmful activities online, but their effective enforcement is uncertain. For both the Digital Services Act and the Online Safety Act, it’s still early days.

We’re also seeing glimmers of hope following the EU’s Digital Markets Act, which has begun to legislate against the Big Tech monopoly. Tackling the dominance of the ‘Big Five’ tech companies feels even more pertinent following the UK riots and Elon Musk’s direct involvement on X, including amplifying far-right posts and disinformation to his nearly 200 million followers.

But while regulation is essential, when it comes to tackling racism and xenophobia Oyidiya wants action from the companies themselves. “It should be the task of social media platforms instead of civil servants who have less resources,” she said. Facebook already understands the problem. The platform’s own research found 64% of all extremist group joins are due to its recommendation tools.

Problem is, Big Tech benefits from division - so why would they change? The same question could be asked of governments.

Shakuntala Banaji, professor of media, culture and social change at the London School of Economics, has analysed short-form video clips as contributors to racial hatred in India, Myanmar, Brazil and the UK. She told me the graphic content only resulted in action when “the violence was legitimised…by members of ruling parties with support of social media.”

In other words: would one of the monster heads really kill one of its own?

Actions you can take ✊

- Don’t engage with harmful content online - not even sending it to your friend with a 😩 emoji. The more we engage, the more the algorithm promotes it.

- Report harmful content you see on social media platforms or make a complaint to your relevant government body (See here for the UK and here for the EU)

- Sign this petition calling on the UK government to launch a public inquiry into the role of social media companies in amplifying hate and inciting the riots.

People vs Big Tech is a collective of more than one hundred tech justice organisations around the world. This means we put together each newsletter with the help of the smartest and most respected experts in this field. Email bigtechstories@the-citizens.com if you have an idea for a Big Tech issue you'd like us to cover.